Monday's Musings: Will Generative AI Drive Humanity Into The Dark Ages Of Knowledge?

Big Brains In AI Discuss The Risks Of Generative AI At Reinvent Futures Event

On July 27th, 2023, almost 300 people gathered at Shack15 in the Ferry Building in San Francisco to discuss the actual risks of generative AI. The event, hosted by Reinvent Futures with Peter Leyden and Joe Boggio, brought together the biggest minds in AI for an evening of deep discussion.

Speakers included:

- Brian Behlendorf

- Gary Bowles

- Eli Chen

- Earl Comstock

- Daniel Erasmus

- CC Gong

- Dazza Greenwood

- Jerry Kaplan

- De Kai

- Fiona Ma

- Ethan Shaotran

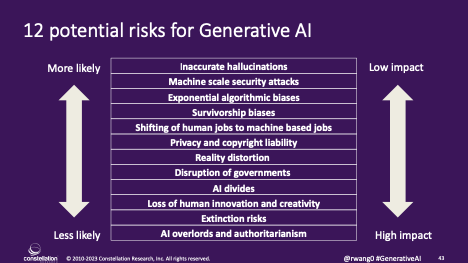

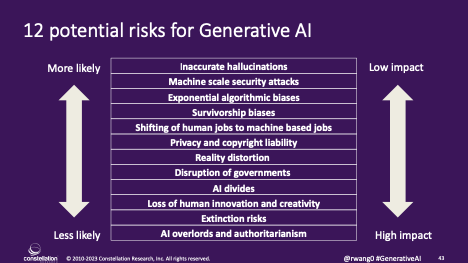

The World Can Expect Twelve Major Risks of AI

In general, the opportunities for AI are exponential, and there is much good ahead. As with all technologies, the humans behind AI can turn it into a weapon or into a tool that advances humanity. This forum focused on the risks as a counterbalance to the opportunities of AI. The discussion covered many of Constellation's twelve major risks of AI (ordered from most likely to least likely):

- Inaccurate hallucinations. By definition, generative AI will hallucinate because of limited data and training. Mitigating this risk requires better training models, more training cycles, and a commitment to addressing false positives and false negatives before these systems are released into the wild.

- Machine-scale security attacks. AI-powered offensive tools will enable attacks that include vulnerability scanning, vishing (voice phishing), biometric authentication bypass, malware generation, and polymorphic malware.

- Exponential algorithmic biases. Even with large datasets, AI systems can make decisions that are systematically unfair to certain cohorts, groups of people, or scenarios. Examples include reporting bias, selection bias, implicit bias, and group attribution bias.

- Survivorship biases. History is written by the victors. While survivorship bias is one form of algorithmic bias, this risk warrants its own listing. The winners, and the narratives they promote, will skew the recorded details of any success or failure. Decisions based on incomplete or inaccurate information create risks in model formation.

- Shifting of human jobs to machine-based jobs. Expect massive job displacement as organizations decide when to choose intelligent automation, augmentation of machines with humans, augmentation of humans with machines, or the human touch. Organizations must determine when they want to involve a human and when stakeholders will pay for human judgment and interaction.

- Privacy and copyright liability. Many models have been trained on publicly available information. However, training on private datasets without permission will create major liability risks.

- Reality distortion. AI will create an overload of misinformation and disinformation. Generating exponential amounts of both continues the onslaught of a warped version of reality. This distortion field will hinder human judgment and obscure reality.

- Disruption of governments. With the persistence of deepfakes, the lack of content lineage, poor means of verification, and few tools to combat disinformation, some futurists and security experts expect the next revolution to be instigated by AI tools.

- AI divides. From social inequities to uneven power dynamics, AI can create a scenario where only those with access to large data troves, massive network interactions, low-cost compute power, and infinite energy will win. This pits large companies against small companies, and governments against individuals who have no recourse.

- Loss of human innovation and creativity. Overreliance on AI will create a dependence on it to mitigate risks, at the expense of innovation and disruption. Society will choose stability and reliability and lose its innate human curiosity and appetite for risk.

- Extinction risks. AI has the capability to create a mass human extinction risk, and society should be cognizant of it and proactive in mitigation measures. Recently, the Center for AI Safety released a statement, signed by over 350 AI experts, influencers, policymakers, and members of the public, stating that "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

- AI overlords and authoritarianism. While the likelihood that a general AI could take over the world or society today remains low, the rapid digitization of the physical world increases the possibility of an AI war. Large organizations with access to AI insights and the means to directly and indirectly influence behaviors will have to be countered by systems that protect individual freedoms and liberties by design.

The Race For Generative AI Dominance May Create A Dark Age Of Public Information

The Internet brought a renaissance of information to the masses. However, AI will create a dark age of public information. One can speculate that competitors and governments will restrict publicly available data to keep rivals from training on it, in order to "protect" their intellectual property, competitive advantage, or national security. Publicly available information might be limited to ads, promotional marketing, or only the information that someone wants to reveal. Other than government-mandated information and disclosures, expect most data to remain in closed silos.

Thus, access to human knowledge will be limited to private networks where the individual's data is the product, or where governments use that data to incriminate the individual. This could lead to a dark age of publicly available information unless policymakers agree on how to bring human knowledge back to the public.

The Bottom Line: Policymakers Must Consider What Rights Humanity Has In The Post-AI World Before It's Too Late

Policymakers have a limited window over the next 18 to 24 months to convene a wide range of futurists, experts, academics, legal scholars, anthropologists, and specialists from other disciplines to consider policies that will define, advance, and preserve humanity in an age of AI. In the United States, one option is a convention to discuss how to enhance the Constitution and Bill of Rights for an age of AI. Separately, a global agreement could establish how knowledge is returned to humanity and society. Similar to intellectual property law with patents, trademarks, and copyright, we may see the insights in LLMs turned over to the public based on criticality. Some insights may have 30-second protection, others 30 years.

Your POV

How will we create an even playing field for capitalism to survive in an age of AI? What risks do you see with AI? What rights should humanity put in place in advance of AI?

Add your comments to the blog or reach me via email: R (at) ConstellationR (dot) com or R (at) SoftwareInsider (dot) org. Please let us know if you need help with your strategy efforts. Here’s how we can assist:

- Developing your AI, digital business, and experience strategy

- Connecting with other pioneers

- Sharing best practices

- Vendor selection

- Implementation partner selection

- Providing contract negotiations and software licensing support

- Demystifying software licensing

Reprints can be purchased through Constellation Research, Inc. To request official reprints in PDF format, please contact Sales.

Disclosures

Although we work closely with many mega software vendors, we want you to trust us. For the full disclosure policy, stay tuned for the full client list on the Constellation Research website. * Not responsible for any factual errors or omissions. However, happy to correct any errors upon email receipt.

Constellation Research recommends that readers consult a stock professional for their investment guidance. Investors should understand the potential conflicts of interest analysts might face. Constellation does not underwrite or own the securities of the companies the analysts cover. Analysts themselves sometimes own stocks in the companies they cover—either directly or indirectly, such as through employee stock-purchase pools in which they and their colleagues participate. As a general matter, investors should not rely solely on an analyst’s recommendation when deciding whether to buy, hold, or sell a stock. Instead, they should also do their own research—such as reading the prospectus for new companies or for public companies, the quarterly and annual reports filed with the SEC—to confirm whether a particular investment is appropriate for them in light of their individual financial circumstances.

Copyright © 2001 – 2023 R Wang and Insider Associates, LLC All rights reserved.

Contact the Sales team to purchase this report on an à la carte basis or join the Constellation Executive Network

R "Ray" Wang